Design with DynamoDB Streams to avoid distributed transactions in Lambda

December 15, 2020

I’ve written before about using DynamoDB Streams to aggregate data in near real-time. Today let’s look at another great use case: safely handling multiple operations without the complexity of distributed transactions.

In a typical RESTful API we synchronously insert and update data in our Lambda functions on client POST or PUT requests. Quite often we need to perform other actions as well, such as publishing the incoming business event to EventBridge or queuing background tasks in SQS. But in that case, invoking multiple downstream services within a single function is effectively a distributed transaction.

Here’s an example: In a restaurant management system a customer wants to create a reservation. We would persist the seating entity in DynamoDB and then notify the restaurant staff (here simplified to sending an email) so they can review and either accept or decline the reservation.

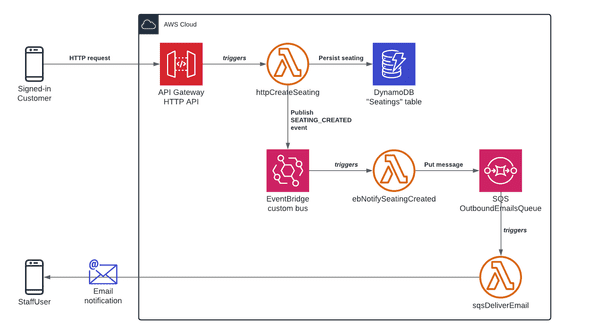

Given that we want to use EventBridge to allow other services to act on core business events, there are a few ways we could implement this. With an optimistic approach we might resort to letting the API Gateway-triggered Lambda perform both the DynamoDB put and the EventBridge putEvents operations.
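To make the optimistic approach concrete, here is a minimal Python sketch. It assumes `table` behaves like a boto3 DynamoDB `Table` resource and `events` like a boto3 EventBridge client; both are passed in so the sketch can run without AWS access. The function name, the `Source` and `DetailType` values, and the item shape are hypothetical.

```python
import json
import uuid


def create_reservation(body, table, events):
    """Optimistic handler: two downstream writes with no shared transaction."""
    seating = {"id": str(uuid.uuid4()), **body}

    # Write 1: persist the seating entity.
    table.put_item(Item=seating)

    # Write 2: publish the business event. If this call raises, the item
    # written above stays in the table and nobody gets notified.
    events.put_events(
        Entries=[
            {
                "Source": "reservations.api",        # hypothetical name
                "DetailType": "ReservationCreated",  # hypothetical name
                "Detail": json.dumps(seating),
            }
        ]
    )
    return {"statusCode": 201, "body": json.dumps({"id": seating["id"]})}
```

Nothing ties the two calls together: once `put_item` has succeeded, a failure in `put_events` cannot undo it.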

But when we invoke multiple downstream services in a Lambda function without implementing a strategy for handling partial failure we risk bringing our system into an invalid state. In this case the function is triggered synchronously so the outcome of a partial failure will depend on how error handling is implemented in the client app. Maybe the seating will be created but staff not notified, or maybe a duplicate reservation would come to exist.

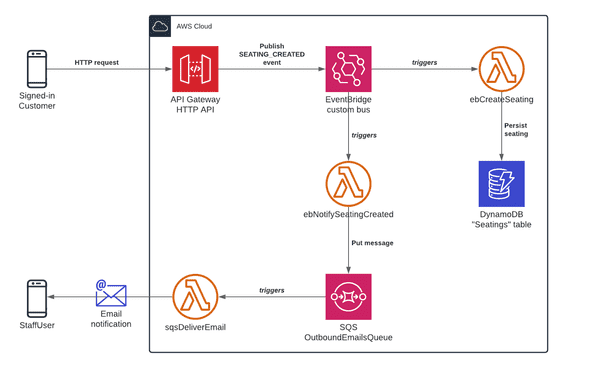

An alternative approach is to use the HTTP API direct integration with EventBridge, where API Gateway publishes the event itself without a Lambda function in between.

However, it’s not a perfect fit for our use case because we must validate the payload at request time to ensure that only valid reservations are accepted by the system. This integration is therefore mostly suitable for data ingestion scenarios.

Design with DynamoDB Streams

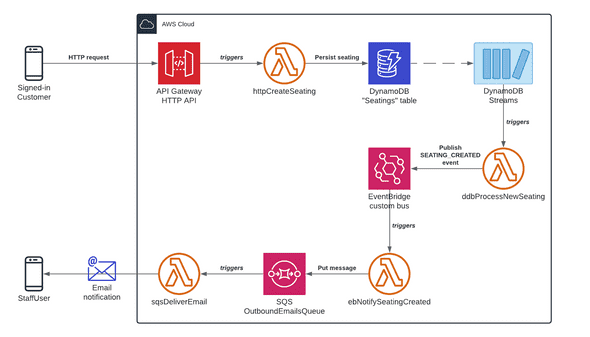

A great option here is to use DynamoDB Streams as depicted in the last diagram:

As soon as the incoming data is validated and persisted, control is returned to the client, which keeps response times fast. The stream then kicks off background processing. Having an intermediate Lambda function publish a nicely formatted business event to EventBridge makes it simple for other services to hook on later, and only that one stream-triggered function has to know about DynamoDB schema internals. In a smaller setup this function could also just do the required work itself.
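The intermediate function could look roughly like the Python sketch below. It assumes the stream is configured to include the new item image, and that `events` behaves like a boto3 EventBridge client (passed in so the sketch runs without AWS access). The `Source` and `DetailType` values are hypothetical, and the attribute conversion covers only strings, numbers and booleans; boto3's `TypeDeserializer` handles the full set of DynamoDB types.

```python
import json


def _plain(attr):
    """Convert one DynamoDB-typed attribute, e.g. {"S": "abc"}, to a plain value.

    Only S, N and BOOL are handled in this sketch.
    """
    (type_tag, value), = attr.items()
    if type_tag == "S":
        return value
    if type_tag == "N":
        return float(value) if any(c in value for c in ".eE") else int(value)
    if type_tag == "BOOL":
        return value
    raise ValueError(f"unsupported attribute type: {type_tag}")


def to_entries(stream_event):
    """Map INSERT records of a stream batch to EventBridge PutEvents entries."""
    entries = []
    for record in stream_event["Records"]:
        if record["eventName"] != "INSERT":
            continue  # this sketch only publishes newly created items
        image = record["dynamodb"]["NewImage"]
        detail = {name: _plain(attr) for name, attr in image.items()}
        entries.append(
            {
                "Source": "reservations",            # hypothetical name
                "DetailType": "ReservationCreated",  # hypothetical name
                "Detail": json.dumps(detail),
            }
        )
    return entries


def handler(stream_event, events):
    """Publish one business event per inserted item; return the number sent."""
    entries = to_entries(stream_event)
    # PutEvents accepts at most 10 entries per call, so send in chunks.
    for start in range(0, len(entries), 10):
        response = events.put_events(Entries=entries[start:start + 10])
        if response.get("FailedEntryCount", 0) > 0:
            # Raising fails the invocation so that Lambda retries the batch.
            raise RuntimeError("some events were not accepted by EventBridge")
    return len(entries)
```

Because a retried batch can publish the same event more than once, subscribers would need to tolerate duplicates.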

The key thing here is that we’ve reached a design without distributed transactions. The API function performs a single write, so it leaves no room for partial failure. And should throttling or downtime occur when invoking downstream services later in the flow, AWS’ built-in retry behaviour takes over.

Advantages

It’s a pattern that brings several advantages, such as:

- No distributed transactions as all functions are single-purpose

- Built-in retries (and, with Lambda Destinations, the ability to send messages to a DLQ after retry attempts are exhausted)

- Simpler design with small and focused functions that are easier to test

- A loosely coupled architecture that is easier to maintain and build out

- Works around the “two subscribers” recommendation for DynamoDB Streams (and EventBridge makes it effortless to add subscribers, no matter how many)

- Fast response to clients as background processing kicks off asynchronously

Challenges

DynamoDB Streams’ retry-until-success behaviour makes us vulnerable to poison messages, so the stream trigger must be configured properly:

- On-failure destination: A DLQ for failed messages must be configured

- Retry attempts + Split batch on error: Use in tandem to home in on a poison message and have it discarded to ensure that stream processing will not block
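As a rough illustration, these settings correspond to parameters of Lambda's `CreateEventSourceMapping` API. The Python sketch below only builds the keyword arguments; the helper name, the retry count of 3 and the `LATEST` starting position are assumptions picked for the example.

```python
def stream_mapping_settings(stream_arn, function_name, dlq_arn, retries=3):
    """Keyword arguments for Lambda's CreateEventSourceMapping (sketch)."""
    return {
        "EventSourceArn": stream_arn,
        "FunctionName": function_name,
        "StartingPosition": "LATEST",  # assumption for this example
        # Retry attempts: without a limit, a failing batch is retried
        # until its records expire from the stream.
        "MaximumRetryAttempts": retries,
        # Split batch on error: halve the failing batch on each retry to
        # narrow it down to the poison message.
        "BisectBatchOnFunctionError": True,
        # On-failure destination: where metadata about discarded batches goes.
        "DestinationConfig": {"OnFailure": {"Destination": dlq_arn}},
    }


# With boto3 available, the settings could be applied like this:
#   boto3.client("lambda").create_event_source_mapping(**settings)
```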

Discarded messages go to the DLQ but without the message body. The body itself must be read from the stream before it expires (24 hours). Hence, alerting must also be in place.
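Recovering the body could be sketched as follows in Python. The sketch assumes the DLQ is an SQS queue that receives Lambda's failure record directly, with the batch position under the `DDBStreamBatchInfo` key, and that `streams` behaves like a boto3 `dynamodbstreams` client (passed in so the sketch runs without AWS access). The function name is hypothetical.

```python
import json


def fetch_failed_records(dlq_message_body, streams):
    """Read the records of a discarded batch back from the stream (sketch)."""
    info = json.loads(dlq_message_body)["DDBStreamBatchInfo"]

    # Position an iterator at the first record of the failed batch.
    iterator = streams.get_shard_iterator(
        StreamArn=info["streamArn"],
        ShardId=info["shardId"],
        ShardIteratorType="AT_SEQUENCE_NUMBER",
        SequenceNumber=info["startSequenceNumber"],
    )["ShardIterator"]

    # A single GetRecords call may return fewer records than requested;
    # following NextShardIterator is left out of this sketch.
    response = streams.get_records(ShardIterator=iterator, Limit=info["batchSize"])
    return response["Records"]
```

This only works while the records are still within the stream's retention window, which is why the alert has to fire quickly.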

Conclusion

DynamoDB Streams and EventBridge are a winning combination for avoiding distributed transactions whenever we need to update system state in multiple downstream services.